Last updated: May 21, 2024

As mentioned in my previous blog post, there was still work to do to drive down the complexity around Contentful content caching and static regeneration of the front end. Looking at the following architecture diagram, you can see that the backend service needs to receive a webhook request from Contentful, interact with Auth0 to generate a JWT, and then redirect back to itself to validate that token, all just to trigger the service to refresh and cache content from Contentful.

The main reason I built this authentication mechanism into the content synchronisation flow was to ensure that only Contentful could call the regenerate API on my backend service, and to stop any other actors from crafting requests and triggering refreshes without my knowledge.

This does work, but I'm not entirely convinced that authentication like this is truly safe, and so far I have been fortunate that any potential chinks in this armour have gone undetected. Mainly, I am not aware of all of the potential flaws that could allow a bad actor to use this API, which has led me to think about an alternative solution. On top of this, the mechanism adds a lot of extra complex code to the backend service and requires multiple requests to the backend service just to get the cache updated.

Up until recently, I was okay with the risk, as I had other priorities, but in a spare minute I took a look at what integrations Contentful offers for its webhooks. I had been working with Google PubSub subscriptions in a Rust service that I own for my day job at loveholidays, so ideally I wanted to use some kind of off-the-shelf, managed message broker and listen to messages emitted by Contentful whenever I publish content updates. Most of the big names, such as AWS and Google, are behind either a Contentful paywall or a vendor paywall (actually both), so I was losing hope and resigning myself to self-hosting my own RabbitMQ or Kafka instance and being in essentially the same boat regarding security as before.

And that's when I saw it.

My salvation was at hand. I had spotted a name that was all too familiar and yet, at the same time, not familiar at all. The solution I recognised instantly was PubNub.

PubNub is "a highly-available, real-time communication platform optimized for maximum performance. You can use it to build chats, IoT Device Control systems, Geolocation & Dispatch apps, and many other communication solutions."

And Contentful provides a simple way to create a content update webhook that publishes content update events to a PubNub channel. With this knowledge, I just need to devise a way to subscribe to this data source.

PubNub provides SDKs and clients for an extensive range of languages and technologies; however, Rust is not on this list. Luckily, a quick search of crates.io yields a super simple crate, https://crates.io/crates/pubnub-hyper, that we can use to implement a PubNub subscriber.

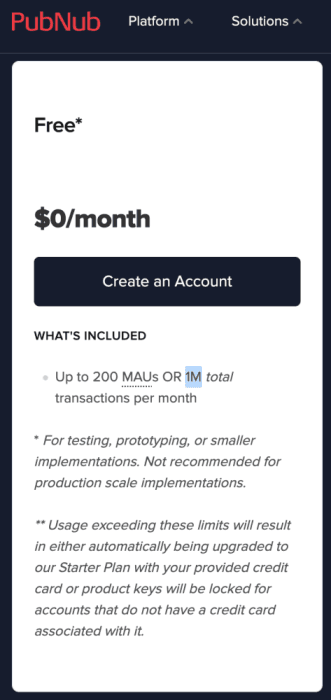

First, however, I needed to sign up and create a PubNub channel, which was super straightforward. I was able to use their free tier, since this portfolio is not for commercial use and my usage is going to be well below their limit of 1 million transactions per month.

With the PubNub channel in place, I could then go ahead and create the Contentful webhook pointed at the new channel. Now all that was left to do was to subscribe to the channel and consume the content update messages.
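The subscriber shown later in this post reads three settings from its config: a publish key, a subscribe key and a channel name. As a minimal sketch of how those could be loaded, here is a std-only example. The field names mirror the ones used in this post, but the environment variable names and the `MissingSetting` error type are assumptions made for illustration and are not taken from the real service.

```rust
use std::fmt;

/// Settings the subscriber needs. The field names mirror the ones used in
/// this post; the environment variable names below are hypothetical.
#[derive(Debug, Clone, PartialEq)]
pub struct PubnubConfig {
    pub pubnub_publish_token: String,
    pub pubnub_subscribe_token: String,
    pub pubnub_channel_name: String,
}

/// Hypothetical error raised when a required setting is absent.
#[derive(Debug, PartialEq)]
pub struct MissingSetting(pub &'static str);

impl fmt::Display for MissingSetting {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "missing required setting {}", self.0)
    }
}

impl std::error::Error for MissingSetting {}

impl PubnubConfig {
    /// Builds the config from any key/value lookup, which keeps it testable
    /// without touching the real process environment.
    pub fn from_lookup<F>(lookup: F) -> Result<Self, MissingSetting>
    where
        F: Fn(&str) -> Option<String>,
    {
        let get = |key: &'static str| lookup(key).ok_or(MissingSetting(key));

        Ok(Self {
            pubnub_publish_token: get("PUBNUB_PUBLISH_KEY")?,
            pubnub_subscribe_token: get("PUBNUB_SUBSCRIBE_KEY")?,
            pubnub_channel_name: get("PUBNUB_CHANNEL_NAME")?,
        })
    }

    /// Convenience wrapper that reads from the process environment.
    pub fn from_env() -> Result<Self, MissingSetting> {
        Self::from_lookup(|key| std::env::var(key).ok())
    }
}
```

Failing fast on a missing key at start-up is one possible design choice here; it surfaces a misconfigured deployment before the service ever tries to subscribe.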

Looking at the crate's docs, we can see that waiting for messages on a PubNub channel holds up whatever code is awaiting them. So, leveraging Rust's fearless concurrency, I spawned a subscriber task using `Tokio` that listens for messages coming in from PubNub and then updates the content cache asynchronously.

```rust
pub async fn subscribe(&self) -> Result<(), PubnubError> {
    let transport = Hyper::new()
        .publish_key(self.config.pubnub_publish_token.clone())
        .subscribe_key(self.config.pubnub_subscribe_token.clone())
        .build();

    let transport = match transport {
        Ok(transport) => transport,
        Err(err) => return Err(PubnubError::TransportBuildError(err))
    };

    let mut pubnub = Builder::new()
        .transport(transport)
        .runtime(TokioGlobal)
        .build();

    let channel_name: channel::Name = self.config.pubnub_channel_name.parse().unwrap();
    let mut stream = pubnub.subscribe(channel_name.clone()).await;

    let cache = self.cache_regenerator.clone();

    tokio::task::spawn(async move {
        loop {
            log::info!("⏰ Waiting for Pubnub message on channel {}", channel_name);

            match stream.next().await {
                Some(message) => {
                    log::info!("📩 Received Pubnub message on channel {} -> {:?}", channel_name, message);

                    let message: PubnubMessage = message.json.into();
                    match message.payload.content_type.as_str() {
                        "blogPost" => cache.regenerate_blog_cache().await,
                        _ => cache.regenerate_cv_cache().await
                    };

                    log::info!("🥙 Pubnub message consumed for channel {}", channel_name);
                }
                None => continue,
            }
        }
    });

    Ok(())
}
```

Let's break this down a bit.

```rust
let transport = Hyper::new()
    .publish_key(self.config.pubnub_publish_token.clone())
    .subscribe_key(self.config.pubnub_subscribe_token.clone())
    .build();

let transport = match transport {
    Ok(transport) => transport,
    Err(err) => return Err(PubnubError::TransportBuildError(err))
};

let mut pubnub = Builder::new()
    .transport(transport)
    .runtime(TokioGlobal)
    .build();
```

The above creates the PubNub transport object by constructing a `Hyper` transport with the publish and subscribe keys that PubNub requires. We then construct a PubNub client instance using the transport. Then we subscribe to our PubNub channel, which gives us a stream object that we can listen to:

```rust
let channel_name: channel::Name = self.config.pubnub_channel_name.parse().unwrap();
let mut stream = pubnub.subscribe(channel_name.clone()).await;
```

With all this set up, we can now spawn a new task and listen for messages emitted from Contentful:

```rust
tokio::task::spawn(async move {
    loop {
        log::info!("⏰ Waiting for Pubnub message on channel {}", channel_name);

        match stream.next().await {
            Some(message) => {
                log::info!("📩 Received Pubnub message on channel {} -> {:?}", channel_name, message);

                let message: PubnubMessage = message.json.into();
                match message.payload.content_type.as_str() {
                    "blogPost" => cache.regenerate_blog_cache().await,
                    _ => cache.regenerate_cv_cache().await
                };

                log::info!("🥙 Pubnub message consumed for channel {}", channel_name);
            }
            None => continue,
        }
    }
});
```

Since `stream.next().await` does not complete until a message arrives, we move this work into its own task so that it does not hold up the caller. That's where `tokio::task::spawn` comes in: we pass it an async block that waits for and processes messages. Once a message comes in, we unwrap the message `Option` and deserialize the message payload. Now that we have access to the message payload, we can choose which portion of the cache to update. In this case, if we receive an update event for a blog post, we update the blog cache; otherwise, we update the CV cache.
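To see the shape of this pattern in isolation, here is a minimal std-only sketch. It is not the code from the service: a `std::sync::mpsc` channel stands in for the PubNub stream, an OS thread stands in for the Tokio task, and `RecordingCache`, `CacheTarget`, `spawn_subscriber` and the `"cvEntry"` content type are hypothetical names invented for the example. Only the `"blogPost"` versus everything-else dispatch is taken from the post.

```rust
use std::sync::mpsc::{self, Receiver};
use std::sync::{Arc, Mutex};
use std::thread::{self, JoinHandle};

/// Which part of the cache a content update should refresh.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum CacheTarget {
    Blog,
    Cv,
}

/// Mirrors the `match` in the post: blog posts refresh the blog cache,
/// every other content type falls through to the CV cache.
pub fn target_for(content_type: &str) -> CacheTarget {
    match content_type {
        "blogPost" => CacheTarget::Blog,
        _ => CacheTarget::Cv,
    }
}

/// Hypothetical stand-in for the real cache regenerator; it only records
/// which refreshes were requested.
#[derive(Default)]
pub struct RecordingCache {
    pub refreshed: Mutex<Vec<CacheTarget>>,
}

/// Spawns a background worker that drains `messages` until every sender has
/// been dropped, so the caller is never held up waiting for the next update.
pub fn spawn_subscriber(
    messages: Receiver<String>,
    cache: Arc<RecordingCache>,
) -> JoinHandle<()> {
    thread::spawn(move || {
        // `recv` blocks this worker only; the loop ends when the channel closes.
        while let Ok(content_type) = messages.recv() {
            let target = target_for(&content_type);
            cache.refreshed.lock().unwrap().push(target);
        }
    })
}

/// Small demonstration: publish two updates, close the channel, then report
/// what the worker refreshed.
pub fn demo() -> Vec<CacheTarget> {
    let (sender, receiver) = mpsc::channel();
    let cache = Arc::new(RecordingCache::default());
    let worker = spawn_subscriber(receiver, Arc::clone(&cache));

    sender.send("blogPost".to_string()).unwrap();
    sender.send("cvEntry".to_string()).unwrap(); // hypothetical content type
    drop(sender); // closing the channel lets the worker finish

    worker.join().unwrap();
    let refreshed = cache.refreshed.lock().unwrap().clone();
    refreshed
}
```

One difference worth noting: this sketch stops its loop once the message source closes, whereas the loop above keeps going on `None`. Which behaviour is right for the real service depends on how the PubNub stream behaves when a subscription ends, which I have not verified here.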

We deserialize the message payload by implementing the `From` trait for my `PubnubMessage` struct. With the following in place, we can transform a `JsonValue` (the `pubnub-hyper` crate's JSON representation) into a `PubnubMessage`:

```rust
#[derive(Deserialize, Debug, Clone, Default)]
#[serde(rename_all = "camelCase")]
struct PubnubMessagePayload {
    // entity_id: String,
    content_type: String,
    // environment: String
}

#[derive(Deserialize, Debug, Clone, Default)]
#[serde(rename_all = "camelCase")]
struct PubnubMessage {
    // topic: String,
    payload: PubnubMessagePayload
}

impl From<JsonValue> for PubnubMessage {
    fn from(value: JsonValue) -> Self {
        match serde_json::from_str::<PubnubMessage>(&value.dump()) {
            Ok(value) => value,
            Err(err) => {
                println!("Error deserialising: {:?} -> {:?}", err, value);

                PubnubMessage::default()
            },
        }
    }
}
```

With the above in place, we get `Into<PubnubMessage> for JsonValue` implemented for free, so we can transform a `JsonValue` into a `PubnubMessage` with the following:

```rust
let message: PubnubMessage = message.json.into();
```

With the Contentful webhook in place publishing content update messages to PubNub, and my backend service subscribed and listening to these messages, we can now update the cache simply, safely and securely. The following log shows the order of events that now occur when a message is received.

Now that we can detect content changes and synchronise accordingly, we can remove the Auth0, request redirection and JWT validation code from the backend service code base. On top of this, I was able to completely remove the service's REST API, since it was no longer needed, shrinking the attack surface of the backend dramatically! Now the service only offers a GraphQL query endpoint, making the client-facing portion of the service completely read-only! These changes can be seen in this commit.

This portfolio's system architecture now looks something like the following. We no longer need to handle authentication and can completely cut out Auth0, removing the complexity of redirecting and validating JWTs. The service now reaches out and subscribes to a managed message broker, whose authentication is handled externally by PubNub. Overall, we have decreased the number of steps needed to keep the state of the system up to date and improved the security of the system.

I think the next potential step we could take would be to remove the need for the backend service to call the Contentful API directly after a content update message is received. It would be great if the webhook message payload supplied the backend service with the Contentful entry that had changed, allowing the backend service to update that single entry without gathering and processing a new snapshot of the CMS.
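To make that idea a little more concrete, here is a rough sketch of what a per-entry update could look like. Everything in it is an assumption: `EntryUpdate`, `EntryCache` and the `body` field are hypothetical, and I have not checked what a Contentful webhook payload can actually carry. The `entity_id` and `content_type` names simply echo the fields in the payload struct shown earlier.

```rust
use std::collections::HashMap;

/// Hypothetical shape of a richer webhook message that carries the changed
/// entry itself rather than only its content type.
#[derive(Debug, Clone, PartialEq)]
pub struct EntryUpdate {
    pub entity_id: String,
    pub content_type: String,
    pub body: String,
}

/// Minimal in-memory cache keyed by entity id.
#[derive(Debug, Default)]
pub struct EntryCache {
    entries: HashMap<String, EntryUpdate>,
}

impl EntryCache {
    /// Inserts or replaces one entry and returns the previous version, if any.
    /// No other entry is touched and no CMS round trip is needed.
    pub fn apply(&mut self, update: EntryUpdate) -> Option<EntryUpdate> {
        self.entries.insert(update.entity_id.clone(), update)
    }

    /// Looks up the cached version of a single entry.
    pub fn get(&self, entity_id: &str) -> Option<&EntryUpdate> {
        self.entries.get(entity_id)
    }
}
```

With something like this, a received message would translate into a single `apply` call instead of a full regeneration, at the cost of trusting the message payload to be complete and in order.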

All in all, the goal was achieved! 🙌