Last updated: June 2, 2023

I noticed that this website took an inordinate amount of time to load in production. This seemingly came out of the blue, as it hadn't always been the case. It started after the Vultr instance hosting my website was forcibly decommissioned and replaced with a new one running an updated OS and security patches. The egregious slowdown did not occur when running the website locally, so there had to be something odd happening in production. So I set out to track the issue down.

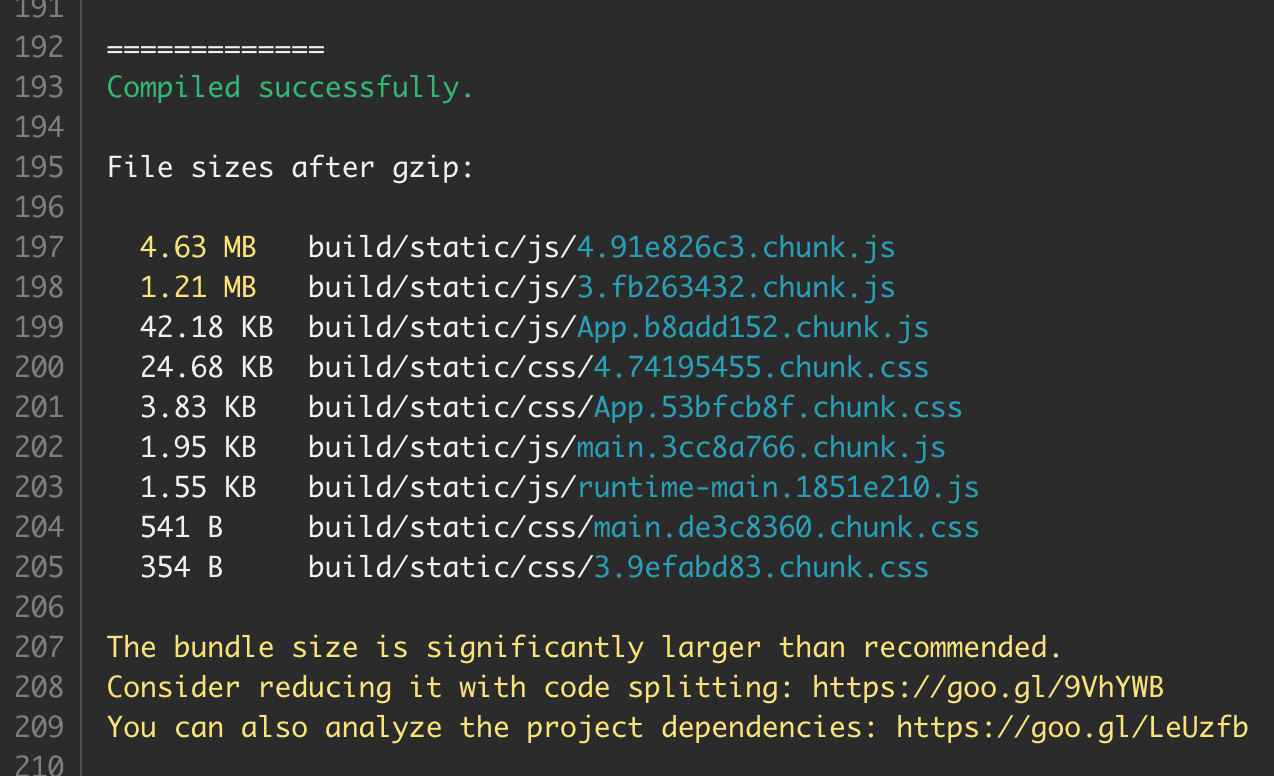

I initially thought the portfolio's JavaScript bundle was too large and was causing the slowdown. My frontend build output warned that the compiled bundle was quite large and that I should make use of React's code-splitting functionality. Since things ran perfectly fine locally, it seemed plausible that the bundles loaded from the production server contributed to the latency, as the files had to be pulled across the internet rather than read directly from local storage. This hypothesis was incorrect and did nothing to solve the root cause of the issue. Still, using React's code splitting was an excellent measure to reduce the size of the JavaScript bundles loaded in parallel in the browser.

Looking at the responses to the backend service requests, I could see that the calls to my backend service were taking anywhere from 15 to 30 seconds each! I first made minor modifications to my frontend to make these requests concurrently, reducing the total latency to that of the longest-running request. But this still did not fix the actual root cause.
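The frontend change itself isn't reproduced here, but the effect of going concurrent can be sketched in Rust, the backend's language. This is a minimal sketch under stated assumptions: `fake_request` is a hypothetical stand-in that simply sleeps to simulate a slow backend call, and the delays are made up. Run one after another, the total time is the sum of the delays; run on separate threads, it is bounded by the slowest one.

```rust
use std::thread;
use std::time::{Duration, Instant};

/// Hypothetical stand-in for a slow backend call: it just sleeps for `delay_ms`.
fn fake_request(delay_ms: u64) -> u64 {
    thread::sleep(Duration::from_millis(delay_ms));
    delay_ms
}

/// Runs every simulated request one after another; elapsed time is roughly the sum.
pub fn run_sequential(delays: &[u64]) -> Duration {
    let start = Instant::now();
    for &d in delays {
        fake_request(d);
    }
    start.elapsed()
}

/// Spawns one thread per simulated request and waits for all of them,
/// so elapsed time is roughly that of the slowest request.
pub fn run_concurrent(delays: &[u64]) -> Duration {
    let start = Instant::now();
    let handles: Vec<_> = delays
        .iter()
        .map(|&d| thread::spawn(move || fake_request(d)))
        .collect();
    for h in handles {
        h.join().expect("request thread panicked");
    }
    start.elapsed()
}

fn main() {
    let delays = [100, 200, 300];
    println!("sequential: {:?}", run_sequential(&delays));
    println!("concurrent: {:?}", run_concurrent(&delays));
}
```

As the post notes, this only hides latency behind the slowest call; it does nothing about why each call is slow.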

I looked further into the backend and could see that the requests made to Contentful were the issue! Since the latency seen in production didn't occur locally, and without much understanding of the issue, I considered using Redis to cache the latest content from Contentful, just to get a quick fix in place and leave it at that. I didn't move forward with this solution because, again, it wasn't solving the root cause; it would only have put a stopgap in place to mitigate the slowdown.

I added extra logging to surface the timing of each Contentful request, since I wanted to understand why the requests were taking so long. Contrasting the timings from the production server against cURL requests I was making to Contentful locally, it was clear that something environment-specific was happening: locally, requests to Contentful took 100-200ms on average, but on the production machine they were spinning their wheels for nearly 15 seconds at a time.
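Per-request timing like this can be added with a small wrapper around each call. A minimal sketch, assuming a synchronous call: `timed` is a hypothetical helper (not part of Reqwest or the original backend), and the sleeping closure in `main` stands in for a real Contentful request.

```rust
use std::thread;
use std::time::{Duration, Instant};

/// Runs `f`, logs how long it took under `label`, and returns the result
/// together with the measured duration.
pub fn timed<T, F: FnOnce() -> T>(label: &str, f: F) -> (T, Duration) {
    let start = Instant::now();
    let out = f();
    let elapsed = start.elapsed();
    // eprintln! keeps timing logs on stderr, separate from normal output.
    eprintln!("[timing] {label}: {} ms", elapsed.as_millis());
    (out, elapsed)
}

fn main() {
    // Hypothetical stand-in for a Contentful request; swap in the real call.
    let (body, elapsed) = timed("contentful entries", || {
        thread::sleep(Duration::from_millis(50));
        String::from("{}")
    });
    println!("got {} bytes in {:?}", body.len(), elapsed);
}
```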

I needed to find where on the production server the latency occurred: was it an issue with the Rust crate Reqwest in my backend service, or an issue at the network level? To rule out the backend service implementation, it made sense to check the network-level lead first. I crafted cURL requests to Contentful while SSH'd into the production machine and saw the same latency as was experienced through the frontend. I now had a proper question to ask: why were plain REST requests to Contentful slow in production and not locally?
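One way to narrow a network-level delay further is to time the hostname lookup separately from opening the connection. A minimal std-only sketch, assuming port 443 and a 10-second connect timeout; `resolve_timed` and `connect_timed` are hypothetical helpers. On Linux, Rust's `ToSocketAddrs` goes through the system resolver (`getaddrinfo`), which typically consults `/etc/hosts` and then DNS according to `/etc/nsswitch.conf`.

```rust
use std::io;
use std::net::{SocketAddr, TcpStream, ToSocketAddrs};
use std::time::{Duration, Instant};

/// Resolves `host:port` through the system resolver and reports how long the lookup took.
pub fn resolve_timed(host: &str, port: u16) -> io::Result<(Vec<SocketAddr>, Duration)> {
    let start = Instant::now();
    let addrs: Vec<SocketAddr> = (host, port).to_socket_addrs()?.collect();
    Ok((addrs, start.elapsed()))
}

/// Opens a TCP connection to an already-resolved address and reports how long
/// it took, so lookup time and connect time can be compared separately.
pub fn connect_timed(addr: SocketAddr, timeout: Duration) -> io::Result<Duration> {
    let start = Instant::now();
    let _stream = TcpStream::connect_timeout(&addr, timeout)?;
    Ok(start.elapsed())
}

fn main() {
    // Host to probe; defaults to localhost so the sketch runs without network access.
    let host = std::env::args().nth(1).unwrap_or_else(|| "localhost".to_string());
    match resolve_timed(&host, 443) {
        Ok((addrs, dns_time)) => {
            println!("DNS lookup for {host} took {dns_time:?}: {addrs:?}");
            if let Some(&addr) = addrs.first() {
                match connect_timed(addr, Duration::from_secs(10)) {
                    Ok(t) => println!("TCP connect to {addr} took {t:?}"),
                    Err(e) => eprintln!("connect to {addr} failed: {e}"),
                }
            }
        }
        Err(e) => eprintln!("lookup for {host} failed: {e}"),
    }
}
```

Running it as `cargo run -- cdn.contentful.com` on both machines and seeing a large lookup time next to a small connect time would point at name resolution. cURL's `-w '%{time_namelookup}'` write-out variable reports a similar split.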

After exhausting each component of my deployment, I finally thought to consider the operating systems that my local machine and the production server were using. My local machine at the time ran Windows, and the production machine ran Ubuntu. I then needed to understand how Windows and Ubuntu resolve hostnames and how they cache DNS records for lookup. Reading this ClouDNS article, it appears that Windows builds a DNS cache whereas Linux does not:

> There was no OS-level DNS caching, so it is a bit harder to display it. Depending on the software you are using, you might find a way to see it. For example, if you are using NSCD (Name Service Caching Daemon), you can see the ASCII strings from the binary cache file. It is located in /var/cache/nscd/hosts, so you can run “strings /var/cache/nscd/hosts” to display it. If you are using Ubuntu 20.10, Fedora 33, or later, Systemd is responsible for the DNS.

I am not using NSCD, and although my Vultr instance runs Ubuntu 22.04 x64, I am not using systemd to handle the DNS cache, mainly for simplicity. This means that every request made from the Ubuntu machine resolves the hostname in flight, since the mapping between hostname and IP address is not cached for reuse!
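To make the missing piece concrete, this is roughly the job a resolver cache (such as nscd or systemd-resolved on Linux) does for every process on the machine: remember a hostname's addresses for a while instead of resolving them on every request. It is a minimal in-process sketch, not how any of those services is implemented; the `DnsCache` type, the single fixed TTL (real caches honour each record's own TTL), and the counting fake resolver are all assumptions for illustration.

```rust
use std::collections::HashMap;
use std::net::{IpAddr, Ipv4Addr};
use std::time::{Duration, Instant};

/// A minimal in-memory cache of hostname -> addresses with one fixed TTL.
/// `lookup` is any resolver function; it is only called on a miss or when
/// the stored entry is older than `ttl`.
pub struct DnsCache<F: FnMut(&str) -> Vec<IpAddr>> {
    lookup: F,
    ttl: Duration,
    entries: HashMap<String, (Vec<IpAddr>, Instant)>,
}

impl<F: FnMut(&str) -> Vec<IpAddr>> DnsCache<F> {
    pub fn new(lookup: F, ttl: Duration) -> Self {
        Self { lookup, ttl, entries: HashMap::new() }
    }

    pub fn resolve(&mut self, host: &str) -> Vec<IpAddr> {
        if let Some((addrs, stored_at)) = self.entries.get(host) {
            if stored_at.elapsed() < self.ttl {
                return addrs.clone(); // cache hit: no lookup performed
            }
        }
        let addrs = (self.lookup)(host); // miss or expired: resolve again
        self.entries.insert(host.to_string(), (addrs.clone(), Instant::now()));
        addrs
    }
}

fn main() {
    let mut lookups = 0;
    let mut cache = DnsCache::new(
        |_host: &str| {
            lookups += 1; // hypothetical slow resolver; counts how often it runs
            vec![IpAddr::V4(Ipv4Addr::new(192, 0, 2, 1))]
        },
        Duration::from_secs(60),
    );
    for _ in 0..3 {
        cache.resolve("example.com");
    }
    drop(cache); // release the closure's borrow of `lookups`
    println!("resolver called {lookups} time(s)"); // prints "resolver called 1 time(s)"
}
```

Without a cache like this anywhere in the stack, each of those three calls would pay the full lookup cost.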

Now that I knew the latency was caused by Ubuntu not building a DNS cache, I could either configure systemd to handle the DNS cache for me or turn to Ubuntu's hosts file (`/etc/hosts`). For simplicity and brevity, I chose to manually map the Contentful API hostname to its corresponding static IP addresses in the hosts file, so that the hostname would not need to be resolved en route.

```
151.101.130.49 cdn.contentful.com
151.101.66.49 cdn.contentful.com
151.101.194.49 cdn.contentful.com
151.101.2.49 cdn.contentful.com
```

Contentful appears to use static IP addresses behind its API hostname. After plugging this mapping into Ubuntu's hosts file, I saw the request latency essentially evaporate! Success! Now I mainly need to keep an eye on the latency of the backend calls to detect if and when Contentful rotates its API IP addresses.
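Each hosts-file line is just an address followed by one or more hostnames, with `#` starting a comment. When checking which Contentful addresses are currently pinned (for example, while watching for an IP rotation), a small parser is enough. A minimal sketch assuming that common hosts-file syntax; `parse_hosts` is a hypothetical helper, and it does not model how the system resolver chooses among several entries for the same name.

```rust
use std::collections::HashMap;
use std::net::IpAddr;

/// Parses hosts-file text into a map from (lowercased) hostname to every
/// address listed for it, in file order. Malformed lines are skipped.
pub fn parse_hosts(text: &str) -> HashMap<String, Vec<IpAddr>> {
    let mut map: HashMap<String, Vec<IpAddr>> = HashMap::new();
    for line in text.lines() {
        // Drop everything after a '#', then split the rest on whitespace.
        let line = line.split('#').next().unwrap_or("");
        let mut fields = line.split_whitespace();
        let ip = match fields.next().and_then(|f| f.parse::<IpAddr>().ok()) {
            Some(ip) => ip,
            None => continue, // blank, comment-only, or not starting with an IP
        };
        for name in fields {
            map.entry(name.to_ascii_lowercase()).or_default().push(ip);
        }
    }
    map
}

fn main() {
    let hosts = std::fs::read_to_string("/etc/hosts").unwrap_or_default();
    let map = parse_hosts(&hosts);
    match map.get("cdn.contentful.com") {
        Some(addrs) => println!("pinned: {addrs:?}"),
        None => println!("cdn.contentful.com is not pinned in /etc/hosts"),
    }
}
```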

Through this journey, I was able to track down why requests to Contentful were so slow. Adding the hostname mapping to the hosts file was a great quick win that did not require any hardcore refactoring or backend changes; it was essentially an operating system problem.

All in all, I'm glad I was presented with this issue; it was essential to keep digging until the root cause presented itself. With the root cause in hand, I was able to implement a targeted fix without putting stopgaps, bodges, and extra infrastructure in place to short-circuit the fix. 🎉